In our exploration of ETL best practices, we’ve covered strategies like incremental loading, data validation, and transformation. But when dealing with truly large datasets, one of the most powerful techniques is parallel processing. Instead of handling data sequentially, parallel processing distributes the workload across multiple CPU cores or across the nodes of a cluster, which can dramatically improve speed and scalability.

What is Parallel Processing?

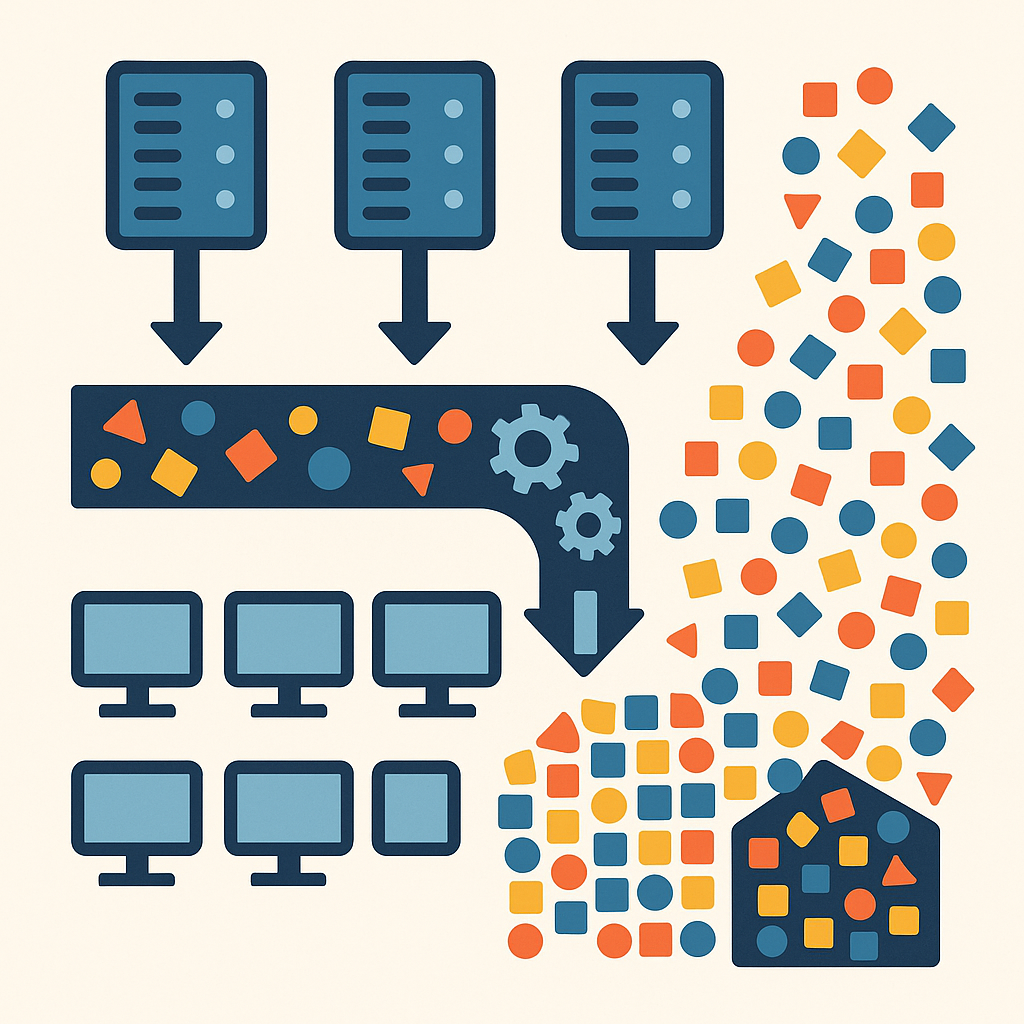

Parallel processing is the technique of splitting a large task into smaller chunks that can be executed simultaneously. In the context of ETL, this means breaking down extraction, transformation, and loading steps into parallel jobs, each handling a subset of the data.
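Here is a minimal sketch of that idea in plain Python. It assumes the data fits in memory as a list of dicts and uses the standard library's `concurrent.futures`. The helper names (`chunk`, `transform_chunk`, `parallel_transform`) and the transform itself are hypothetical. A thread pool suits I/O-bound steps such as reading files or querying a database. For CPU-heavy transforms, `ProcessPoolExecutor` exposes the same `map()` interface and sidesteps Python's GIL.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk(rows, size):
    """Split a list of rows into consecutive chunks of at most `size` rows."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def transform_chunk(rows):
    """Hypothetical transform: tidy the name and drop rows with no amount."""
    return [
        {"name": r["name"].strip().title(), "amount": r["amount"]}
        for r in rows
        if r["amount"] is not None
    ]


def parallel_transform(rows, chunk_size=2, workers=4):
    """Transform `rows` chunk by chunk, running the chunks concurrently."""
    chunks = chunk(rows, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() runs the chunks concurrently but yields results in input order
        results = list(pool.map(transform_chunk, chunks))
    # Flatten the per-chunk outputs back into a single list ready for loading
    return [row for part in results for row in part]


rows = [
    {"name": "  alice ", "amount": 10},
    {"name": "BOB", "amount": None},
    {"name": "carol", "amount": 5},
]
print(parallel_transform(rows))
# [{'name': 'Alice', 'amount': 10}, {'name': 'Carol', 'amount': 5}]
```

Because `map()` preserves input order, the output is the same as a sequential run. Only the wall-clock time changes.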

Why Parallel Processing Matters for ETL

- Speed: Process millions or billions of rows much faster by distributing workloads.

- Scalability: Handle growing data volumes by adding workers or nodes, so run times don't grow in step with the data.

- Efficiency: Make better use of hardware and cloud resources, especially in distributed environments.

- Resilience: Fault-tolerant frameworks can re-run only failed partitions instead of restarting entire jobs.
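A small hand-rolled retry loop can illustrate the idea of re-running only the failed partitions. This is a sketch under simplifying assumptions, not how any particular framework implements fault tolerance. Partitions are a dict keyed by date, `run_partitions` is an illustrative helper, and `sum_amounts` is a hypothetical job that fails once on purpose to simulate a transient error.

```python
from concurrent.futures import ThreadPoolExecutor


def run_partitions(partitions, process, max_attempts=3, workers=4):
    """Run `process(key, rows)` for every partition in parallel, then re-run
    only the partitions that raised, up to `max_attempts` rounds in total.

    Returns (results, failed): results maps key -> output, and failed lists
    the keys that were still failing after the last attempt.
    """
    results = {}
    pending = list(partitions)
    for _ in range(max_attempts):
        if not pending:
            break
        with ThreadPoolExecutor(max_workers=workers) as pool:
            futures = {key: pool.submit(process, key, partitions[key]) for key in pending}
        # Leaving the `with` block waits for every submitted job to finish
        still_failing = []
        for key, fut in futures.items():
            try:
                results[key] = fut.result()
            except Exception:
                still_failing.append(key)  # only this partition is retried
        pending = still_failing
    return results, pending


# Hypothetical job that fails once on one partition to simulate a transient error
attempts = {}


def sum_amounts(key, amounts):
    attempts[key] = attempts.get(key, 0) + 1
    if key == "2024-01-02" and attempts[key] == 1:
        raise ConnectionError("transient failure")
    return sum(amounts)


partitions = {"2024-01-01": [3, 4], "2024-01-02": [5]}
results, failed = run_partitions(partitions, sum_amounts)
print(results, failed)            # {'2024-01-01': 7, '2024-01-02': 5} []
print(sorted(attempts.items()))   # [('2024-01-01', 1), ('2024-01-02', 2)]
```

The healthy partition runs once. Only the partition that failed is submitted again.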

Parallel Processing Techniques

- Data Partitioning: Split datasets into partitions (e.g., by date, ID ranges, or hash keys) and process them in parallel.

- Pipeline Parallelism: Run the extract, transform, and load stages concurrently, each working on a different batch of data (for example, loading batch 1 while transforming batch 2 and extracting batch 3).

- Cluster Computing: Use distributed frameworks like Apache Spark or Hadoop to process data across multiple nodes.

- Cloud-native Scaling: Services like AWS Glue, Azure Data Factory, and Google Cloud Dataflow can auto-scale compute resources for parallel execution.
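Below is a minimal sketch of data partitioning followed by parallel processing of each partition. It uses only standard-library modules. The column names (`customer_id`, `day`, `amount`) and the helper functions are hypothetical. Distributed frameworks handle partitioning and scheduling themselves, so treat this as an illustration of the concept rather than how those tools work internally.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from datetime import date


def hash_partition(rows, key, num_partitions):
    """Bucket rows by a stable hash of `key`; crc32 gives the same bucket on
    every run, unlike Python's built-in hash() for strings."""
    buckets = defaultdict(list)
    for row in rows:
        bucket = zlib.crc32(str(row[key]).encode("utf-8")) % num_partitions
        buckets[bucket].append(row)
    return dict(buckets)


def month_partition(rows, key):
    """Bucket rows by the calendar month of a date column, e.g. '2024-03'."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[row[key].strftime("%Y-%m")].append(row)
    return dict(buckets)


def total_amount(rows):
    """Hypothetical per-partition job: sum the `amount` column."""
    return sum(r["amount"] for r in rows)


def aggregate_partitions(partitions, workers=4):
    """Process each partition independently and concurrently."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {name: pool.submit(total_amount, rows) for name, rows in partitions.items()}
    return {name: fut.result() for name, fut in futures.items()}


orders = [
    {"customer_id": 1, "day": date(2024, 3, 5), "amount": 20},
    {"customer_id": 2, "day": date(2024, 3, 9), "amount": 15},
    {"customer_id": 1, "day": date(2024, 4, 1), "amount": 30},
]
print(aggregate_partitions(month_partition(orders, "day")))
# {'2024-03': 35, '2024-04': 30}
print(aggregate_partitions(hash_partition(orders, "customer_id", 4)))
```

Hash partitioning keeps every row for a given key in the same partition, which matters for per-key aggregations. It usually spreads the load evenly when there are many distinct keys. Date-based partitions are easier to reason about and to reprocess one at a time, but they can be uneven if some periods are much busier than others.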
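Pipeline parallelism can be sketched with three threads connected by queues. While one batch is being loaded, the next is being transformed and a third is being extracted. The stage functions, the doubling transform, and the in-memory source and sink are hypothetical placeholders. A real pipeline would also need error propagation: in this sketch, an exception in one stage would leave the others waiting.

```python
import queue
import threading

DONE = object()  # sentinel that marks the end of the stream


def extract(batches, out_q):
    for batch in batches:      # hypothetical source: an in-memory list of batches
        out_q.put(batch)
    out_q.put(DONE)


def transform(in_q, out_q):
    while (batch := in_q.get()) is not DONE:
        out_q.put([value * 2 for value in batch])  # placeholder transform
    out_q.put(DONE)


def load(in_q, sink):
    while (batch := in_q.get()) is not DONE:
        sink.extend(batch)     # stand-in for a database or file write


def run_pipeline(batches):
    # Bounded queues apply backpressure: a fast stage blocks when the next one falls behind
    extracted, transformed = queue.Queue(maxsize=2), queue.Queue(maxsize=2)
    sink = []
    stages = [
        threading.Thread(target=extract, args=(batches, extracted)),
        threading.Thread(target=transform, args=(extracted, transformed)),
        threading.Thread(target=load, args=(transformed, sink)),
    ]
    for stage in stages:
        stage.start()
    for stage in stages:
        stage.join()
    return sink


print(run_pipeline([[1, 2], [3], [4, 5]]))  # [2, 4, 6, 8, 10]
```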

Tools That Enable Parallel Processing

- Apache Spark: In-memory distributed processing engine ideal for large-scale ETL and analytics.

- AWS Glue: Serverless ETL service that automatically distributes jobs across multiple nodes.

- Dask: Python library for parallel computing that integrates closely with pandas and NumPy, offering parallel DataFrame and array APIs modeled on theirs.

- Hadoop MapReduce: Batch processing framework that pioneered large-scale distributed ETL.

Parallel processing turns ETL bottlenecks into opportunities for scale. With the right partitioning strategies and distributed tools, your pipelines can handle ever-growing datasets without sacrificing performance or overrunning budgets.